EXPLAINABILITY

Motivation

Despite achieving high accuracy, AI models can exhibit bias due to their reliance on ‘shortcuts.’ These models learn correlations, but correlations do not necessarily imply causality. To assess whether a model has identified the correct correlations or is biased, the field of eXplainable AI (XAI) has emerged, providing insights into the internal processes of AI.

XAI serves various purposes. When introducing a new product to the market, AI developers and certification entities seek assurance that the model will make decisions for the right reasons. In the event of a critical incident, investigative teams aim to understand why the AI made a mistake. Additionally, some researchers employ explainability techniques to comprehend new physical laws discovered by AI.

Research directions

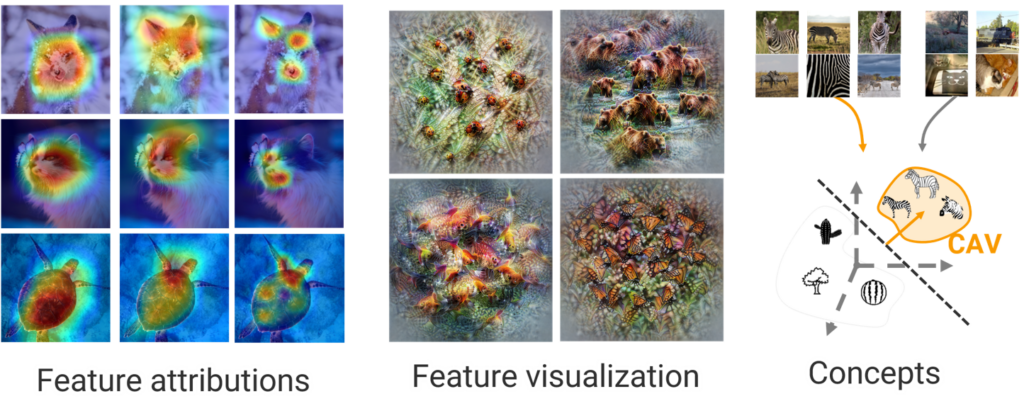

The field of XAI has flourished, offering a diverse variety of methods documented in numerous surveys and taxonomies. The DEEL project has made significant contributions to this field, particularly in three categories of methods:

They elucidate specific model predictions by computing the most critical data features used by the model.

Automatically extract concepts learned by the model and evaluate there importance for each prediction.

Generates a new data samples aiming to be the most representative of a particular propriety (ex : a class).

Researches - Attribution Methods

These methods aims to build an importance map for each input (image pixel or individual words). We proposed two black-box methods grounded within the theoretical framework of sensitivity analysis using Sobol indices [1], and Hilbert-Schmidt Independence Criterion (HSIC) [2] with application in classification, object detection and NLP use cases.

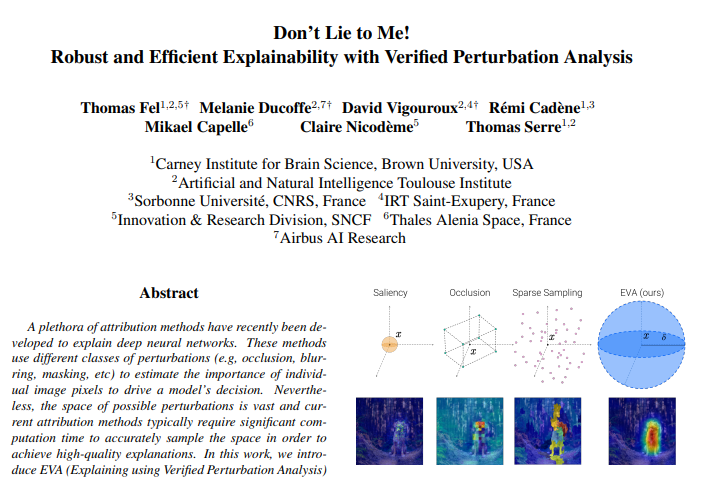

We also propose EVA (Explaining using Verified Perturbation Analysis) [3], a method that exhaustively explores the perturbation space to generate explanations using formal methods.

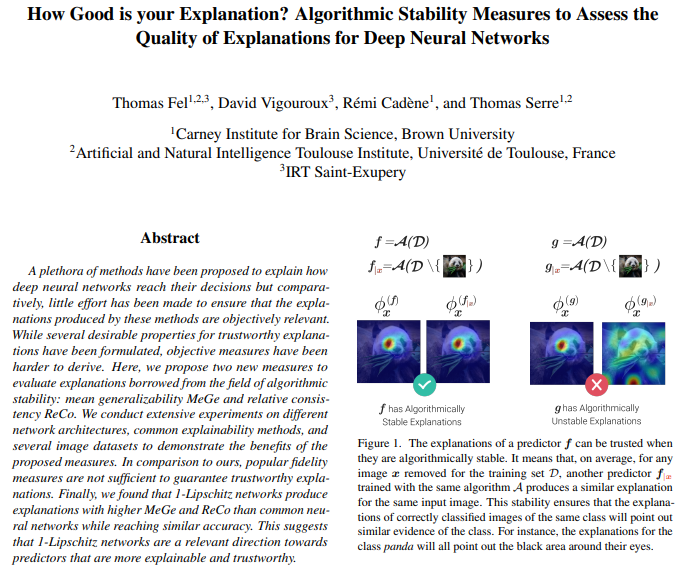

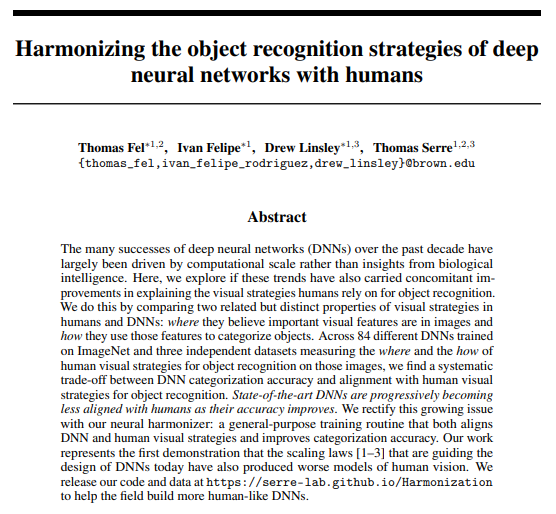

Since attribution methods are subject to confirmation bias, several metrics have been proposed to objectively assess the trustworthiness of explanations. In this field, we proposed two new metrics [4], together with a methodology to evaluate the alignment of the attributions with the human explanations [5], and a training methodology to improve this alignment.

Researches - Feature visualization

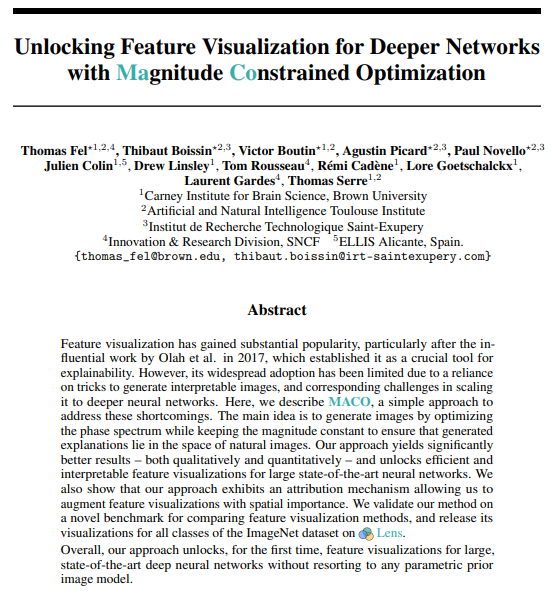

These methods aim to generate synthetic images that elicit a strong response from specifically targeted neurons (or groups of neurons). We proposed a new method [6] called MACO (MAgnitude Constrained Optimization), that unlocks feature visualizations for large modern CNNs.

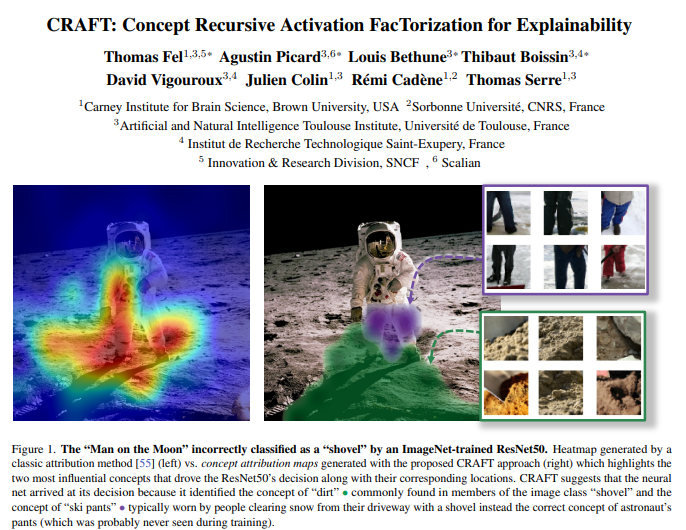

Researches - Concepts based methods

These methods aim to find human-interpretable concepts in the activation space of a neural network to complement the attribution informations. Our contribution to the field, called CRAFT (Concept Recursive Activation FacTorization for Explainability) [7] aims to automate the extraction of high-level concepts learned by deep neural networks based on Non-Negative Matrix Factorization (NMF).

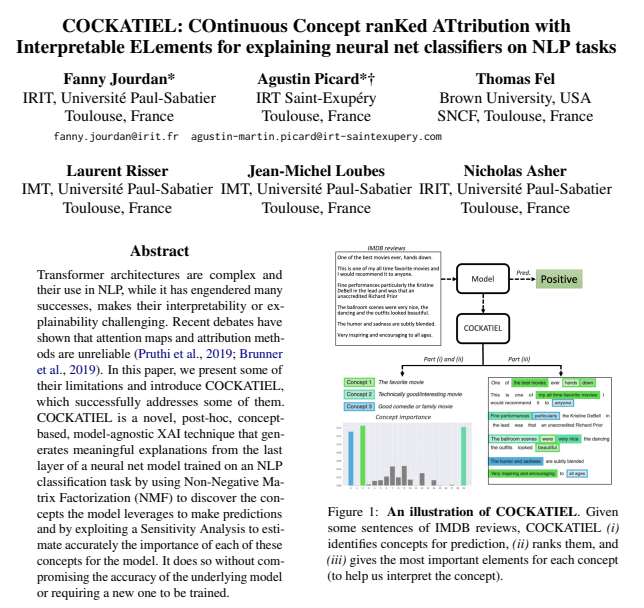

An adaptation to the domain of NLP has also been proposed in [8].

Tool - Xplique

We developed an opensource library for explainability, called Xplique, which includes representative explainability methods with a unified user-friendly interface. It handles a plethora of state-of-the-art methods among the three previsouly described families, and most of metrics. A guideline will accompany soon this library to help industrial users to leverage XAI in their industry.

Main Publications

1. « Look at the Variance! Efficient Black-box Explanations with Sobol-based Sensitivity Analysis », Thomas FEL, Remi Cadene, Mathieu Chalvidal, Matthieu Cord, David Vigouroux, and Thomas Serre, NeurIPS 2021

2. « Making sense of dependence: Efficient black-box explanations using dependence measure», Paul Novello, Thomas FEL, and David Vigouroux, NeurIPS 2022

3. « Don’t lie to me! robust and efficient explainability with verified perturbation analysis», Thomas Fel, Melanie Ducoffe, David Vigouroux, Rémi Cadène, Mikaël Capelle, Claire Nicodème, and Thomas Serre, CVPR 2023

4. « How good is your explanation? Algorithmic stability measures to assess the quality of explanations for deep neural networks», Thomas Fel, David Vigouroux, Rémi Cadène, and Thomas Serre, WACV 2022

5. « Harmonizing the object recognition strategies of deep neural networks with humans», Thomas Fel, Ivan Felipe, Drew Linsley, and Thomas Serre, NeurIPS 2022

6. « Unlocking feature visualization for deeper networks with magnitude constrained optimization », Thomas Fel, Thibaut Boissin, Victor Boutin, Agustin Picard, Paul Novello, Julien Colin, Drew Linsley, Tom Rousseau, Rémi Cadène, Laurent Gardes, and Thomas Serre, preprint

7. « Craft: Concept recursive activation factorization for explainability», Thomas Fel, Agustin Picard, Louis Béthune, Thibaut Boissin, David Vigouroux, Julien Colin, Rémi Cadène, and Thomas Serre, CVPR 2023

8. « COCKATIEL: COntinuous concept ranKed ATtribution with interpretable ELements for explaining neural net classifiers on NLP», Fanny Jourdan, Agustin Picard, Thomas Fel, Laurent Risser, Jean-Michel Loubes, and Nicholas Asher, ACL 2023