UNCERTAINTY QUANTIFICATION

Motivation

Most machine learning (ML) and deep learning (DL) models can be regarded as black boxes that provide predictions without any notion of the uncertainty associated with these predictions. Even in classification, where the softmax output of a typical ML model can be interpreted as a measure of the uncertainty of a prediction, softmax values are frequently overconfident and poorly calibrated. The lack of proper uncertainty quantification in ML models is one of the key factors hindering their adoption in critical applications, and a major obstacle to the associated certification process. Predictive Uncertainty Quantification (UQ) is the field of research that deals with quantifying the uncertainty in the predictions of neural networks and other ML models. It aims at building trustworthy ML models that provide not only point predictions but also a notion of the uncertainty associated with them. Based on these uncertainty estimates, users can safely decide when to trust the predictions of the model and when not to.

Research directions

Research in Uncertainty Quantification is rich and varied; the DEEL project contributes to two of its subfields, Conformal Prediction and risk control, described below. Our contributions are twofold: methodological work for various safety-critical tasks on the one hand, and a new library on the other.

Conformal Prediction is a family of post-processing, model-agnostic techniques that provide prediction sets instead of point predictions. These prediction sets are built using held-out calibration data and come with rigorous finite-sample probabilistic guarantees: given a user-specified error rate α, and assuming that calibration and test data are exchangeable (e.g. i.i.d.), the prediction sets contain the true output with probability at least 1 − α, i.e. they fail at a rate no greater than α.
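
To make the guarantee concrete, here is a minimal sketch of split conformal regression, the simplest member of this family, using absolute residuals as nonconformity scores. It assumes an already-trained model and calibration data exchangeable with the test data; the function name `split_conformal_interval`, the toy model f(x) = 2x and the synthetic data are illustrative assumptions, not taken from our work or from the PUNCC API.

```python
import numpy as np


def split_conformal_interval(residuals_calib, y_pred_test, alpha):
    """Split conformal prediction intervals for regression (illustrative sketch).

    residuals_calib: absolute residuals |y - f(x)| of a trained model f on held-out calibration data
    y_pred_test: point predictions f(x) on the test inputs
    alpha: user-specified error rate, e.g. 0.1 for a target coverage of 90%
    """
    scores = np.sort(np.asarray(residuals_calib))
    n = len(scores)
    # Finite-sample correction: use the ceil((n + 1)(1 - alpha))-th smallest score
    k = int(np.ceil((n + 1) * (1 - alpha)))
    # With too few calibration points for this alpha, the only valid interval is unbounded
    q = np.inf if k > n else scores[k - 1]
    return y_pred_test - q, y_pred_test + q


# Usage on synthetic data, with a hypothetical pre-trained model f(x) = 2x
rng = np.random.default_rng(0)
x_calib, x_test = rng.uniform(0, 1, 1000), rng.uniform(0, 1, 10_000)
y_calib = 2 * x_calib + rng.normal(0, 0.1, x_calib.shape)
y_test = 2 * x_test + rng.normal(0, 0.1, x_test.shape)

lower, upper = split_conformal_interval(np.abs(y_calib - 2 * x_calib), 2 * x_test, alpha=0.1)
coverage = np.mean((lower <= y_test) & (y_test <= upper))
print(f"empirical coverage: {coverage:.3f}")  # close to the 0.90 target
```

Taking the ⌈(n+1)(1−α)⌉-th smallest score, rather than the plain empirical (1−α)-quantile, is the finite-sample correction that yields the 1 − α coverage guarantee.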

A prediction provided by a model may be correct or incorrect, but not all errors are equally severe. The severity of an error can be encoded by a loss function. Like Conformal Prediction, risk control techniques provide prediction sets instead of point predictions. Given a user-specified risk level, the prediction sets are guaranteed to have an expected loss no greater than this maximum risk level.
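
One standard formalization is the conformal risk control procedure of Angelopoulos et al., stated here as a reference point rather than as the exact variant used in our work. For each calibration example i, L_i(λ) denotes the loss of the prediction set obtained with parameter λ; the losses are assumed bounded by B and non-increasing in λ, and calibration and test data are assumed exchangeable:

```latex
% Calibration losses L_i(\lambda) \in [0, B], non-increasing in \lambda
% (a larger \lambda gives larger prediction sets and hence a smaller loss)
\hat{R}_n(\lambda) = \frac{1}{n}\sum_{i=1}^{n} L_i(\lambda),
\qquad
\hat{\lambda} = \inf\Big\{\lambda : \tfrac{n}{n+1}\,\hat{R}_n(\lambda) + \tfrac{B}{n+1} \le \alpha\Big\}
\quad\Longrightarrow\quad
\mathbb{E}\big[L_{n+1}(\hat{\lambda})\big] \le \alpha
```

With the miscoverage loss L_i(λ) = 1{y_i ∉ C_λ(x_i)} and B = 1, this recovers the coverage guarantee of Conformal Prediction.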

Research - Conformal Prediction

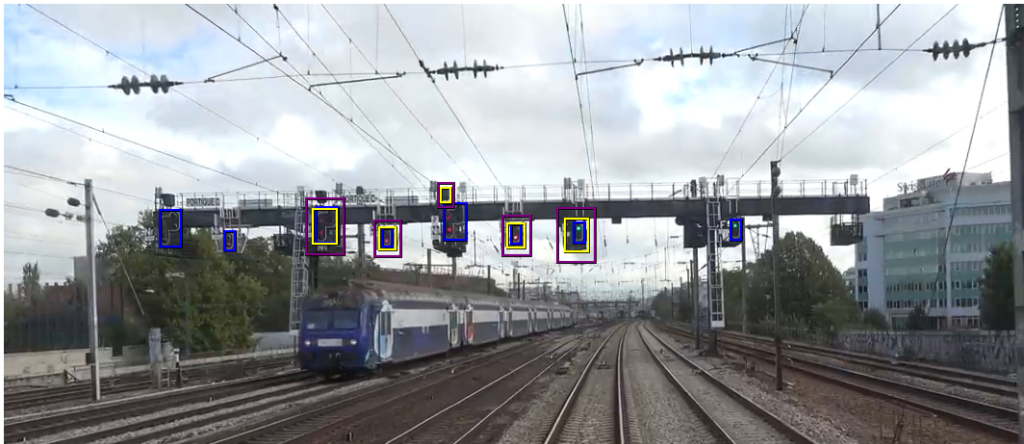

Providing reliable uncertainty quantification for complex visual tasks such as object detection is of utmost importance for safety-critical applications such as autonomous driving or tumor detection. In [4], we show how to apply conformal prediction methods to the task of object localization, and illustrate our analysis on a pedestrian detection use case. This line of research is further developed in [2], where a railway signal detection dataset is built for the object detection task and the effect of a new multiplicative score is explored.
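
The sketch below illustrates one simple way to conformalize bounding boxes; it is not the exact procedure of [4] or [2]. Each calibration box receives a single score, the largest amount by which the true box sticks out of the predicted box on any side, and the calibrated margin enlarges test boxes so that the whole true box is contained in them with probability at least 1 − α. The additive score measures this in pixels; the multiplicative variant divides each side's error by the predicted box width or height, which is our assumption of what a multiplicative score can look like, not necessarily the one studied in [2]. All function names and the synthetic boxes are hypothetical.

```python
import numpy as np

SIGNS = np.array([-1.0, -1.0, 1.0, 1.0])  # outward direction of x_min, y_min, x_max, y_max


def outward_errors(pred, true):
    """Per-side amount by which each true box sticks out of its predicted box.

    Boxes are (n, 4) arrays of (x_min, y_min, x_max, y_max); a positive value means
    the true box extends beyond the predicted box on that side.
    """
    return (true - pred) * SIGNS


def box_scale(pred):
    """Width, height, width, height of each predicted box (used by the multiplicative score)."""
    w = pred[:, 2] - pred[:, 0]
    h = pred[:, 3] - pred[:, 1]
    return np.stack([w, h, w, h], axis=1)


def calibrate_margin(pred_cal, true_cal, alpha, multiplicative=False):
    """Conformal quantile of the per-box score (worst side, additive or relative)."""
    err = outward_errors(pred_cal, true_cal)
    if multiplicative:
        err = err / box_scale(pred_cal)
    scores = np.sort(err.max(axis=1))  # one score per box: its worst side
    n = len(scores)
    k = int(np.ceil((n + 1) * (1 - alpha)))
    return np.inf if k > n else scores[k - 1]


def conformal_box(pred, q, multiplicative=False):
    """Enlarge each predicted box by the calibrated margin q on every side."""
    margin = q * box_scale(pred) if multiplicative else np.full(pred.shape, q)
    return pred + margin * SIGNS


rng = np.random.default_rng(0)


def make_boxes(n):
    """Hypothetical detector: true boxes plus errors that grow with box size."""
    xy = rng.uniform(0, 500, (n, 2))
    wh = rng.uniform(10, 200, (n, 2))
    true = np.hstack([xy, xy + wh])
    pred = true + rng.normal(0, 0.05, (n, 4)) * np.hstack([wh, wh])
    return pred, true


pred_cal, true_cal = make_boxes(1000)
pred_test, true_test = make_boxes(5000)
coverage = {}
for mult in (False, True):
    q = calibrate_margin(pred_cal, true_cal, alpha=0.1, multiplicative=mult)
    boxes = conformal_box(pred_test, q, multiplicative=mult)
    inside = np.all(outward_errors(boxes, true_test) <= 0, axis=1)
    coverage["multiplicative" if mult else "additive"] = inside.mean()
print(coverage)  # both close to the 0.90 target
```

The multiplicative margin scales with each predicted box, so small boxes are enlarged by fewer pixels than large ones, whereas the additive margin is the same number of pixels for every box.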

The paper [5] studies safety-critical prediction problems in which a reference model (ground truth) is approximated by a neural network surrogate, and where underestimating the ground truth can have severe consequences. Using Bernstein-type concentration inequalities, we show how to evaluate the surrogate and shift it so that it overestimates the ground truth with high probability. In particular, we apply these tools to an example from the aviation domain.
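
A minimal sketch of the idea, under assumptions that differ from [5]: a constant shift δ is certified with the empirical Bernstein bound of Maurer and Pontil (one Bernstein-type inequality among several), applied to the indicator that the shifted surrogate still underestimates the ground truth, with a union bound over a finite grid of candidate shifts. With probability at least 1 − η over the calibration sample, the returned shift keeps the underestimation probability below ε. The function names, the grid and the synthetic data are illustrative.

```python
import numpy as np


def empirical_bernstein_upper(z, eta):
    """Upper confidence bound on E[Z] for i.i.d. Z_i in [0, 1], valid with probability >= 1 - eta
    (empirical Bernstein bound, Maurer and Pontil, 2009)."""
    n = len(z)
    log_term = np.log(2.0 / eta)
    var = np.var(z, ddof=1)  # unbiased sample variance
    return z.mean() + np.sqrt(2.0 * var * log_term / n) + 7.0 * log_term / (3.0 * (n - 1))


def shift_surrogate(y_true, y_surrogate, epsilon, eta, shifts):
    """Smallest candidate shift delta such that, with probability >= 1 - eta over the
    calibration sample, P(surrogate + delta < ground truth) <= epsilon.
    Returns None if no candidate can be certified."""
    eta_k = eta / len(shifts)  # union bound over the finite grid of candidate shifts
    for delta in np.sort(shifts):
        # Z_i = 1 when the shifted surrogate still underestimates the ground truth
        z = (y_surrogate + delta < y_true).astype(float)
        if empirical_bernstein_upper(z, eta_k) <= epsilon:
            return delta
    return None


# Usage with a hypothetical surrogate whose errors are N(0, 0.1)
rng = np.random.default_rng(0)
y_true = rng.normal(size=5000)
y_surrogate = y_true + rng.normal(0, 0.1, size=5000)
delta = shift_surrogate(y_true, y_surrogate, epsilon=0.05, eta=0.01, shifts=np.linspace(0, 1, 101))

# Check on fresh data: the shifted surrogate should rarely underestimate
y_new = rng.normal(size=100_000)
s_new = y_new + rng.normal(0, 0.1, size=100_000)
under_rate = np.mean(s_new + delta < y_new)
print(delta, under_rate)
```

The union bound makes every candidate's bound hold simultaneously, so scanning the grid and stopping at the first certified shift does not invalidate the guarantee.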

Research - Risk Control

The paper [1] explores how to apply conformal risk control to object detection. By comparing different correction functions and score relaxations, we obtain tighter prediction sets for a variety of models.
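
To illustrate risk control with a graded loss, the sketch below (not the procedure of [1]) enlarges each predicted box by λ pixels on every side and uses as loss the fraction of the ground-truth box area left uncovered, which is bounded by B = 1 and non-increasing in λ. It selects the smallest λ on a grid satisfying the conformal risk control condition n/(n+1) · R̂(λ) + B/(n+1) ≤ α; the function names, the grid and the synthetic boxes are hypothetical.

```python
import numpy as np

SIGNS = np.array([-1.0, -1.0, 1.0, 1.0])  # push x_min, y_min down and x_max, y_max up


def uncovered_fraction(pred, true, lam):
    """Fraction of each ground-truth box area NOT covered by the predicted box enlarged
    by lam pixels on every side. Boxes are (n, 4) arrays of (x_min, y_min, x_max, y_max)."""
    big = pred + lam * SIGNS
    iw = np.clip(np.minimum(big[:, 2], true[:, 2]) - np.maximum(big[:, 0], true[:, 0]), 0.0, None)
    ih = np.clip(np.minimum(big[:, 3], true[:, 3]) - np.maximum(big[:, 1], true[:, 1]), 0.0, None)
    area = (true[:, 2] - true[:, 0]) * (true[:, 3] - true[:, 1])
    return 1.0 - iw * ih / area  # in [0, 1], non-increasing in lam


def crc_lambda(pred_cal, true_cal, alpha, lambdas, B=1.0):
    """Smallest lam on the grid with n/(n+1) * empirical risk + B/(n+1) <= alpha."""
    n = len(pred_cal)
    for lam in np.sort(lambdas):
        risk = uncovered_fraction(pred_cal, true_cal, lam).mean()
        if n / (n + 1) * risk + B / (n + 1) <= alpha:
            return lam
    return np.max(lambdas)  # fall back to the most conservative candidate


rng = np.random.default_rng(0)


def make_boxes(n):
    """Hypothetical detector: true boxes plus N(0, 5 px) errors on each coordinate."""
    xy = rng.uniform(0, 500, (n, 2))
    wh = rng.uniform(30, 200, (n, 2))
    true = np.hstack([xy, xy + wh])
    return true + rng.normal(0, 5.0, (n, 4)), true


pred_cal, true_cal = make_boxes(1000)
pred_test, true_test = make_boxes(5000)
lam = crc_lambda(pred_cal, true_cal, alpha=0.05, lambdas=np.linspace(0, 50, 501))
test_risk = uncovered_fraction(pred_test, true_test, lam).mean()
print(lam, test_risk)  # test_risk should be close to or below alpha = 0.05
```

Unlike a plain miscoverage loss, this loss penalizes a box that misses only a thin strip of the true object in proportion to the missed area, which typically allows smaller enlargements for the same risk level.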

Tool - PUNCC

We develop PUNCC, an open-source library for Conformal Prediction (CP) that provides state-of-the-art CP algorithms for a variety of ML tasks such as classification, regression, anomaly detection and object detection. It includes multiple evaluation metrics and plotting tools, as well as several tutorials to help users get familiar with the library. In [3], we present the design of PUNCC and showcase its use and results with different CP procedures, tasks, and machine learning models from various frameworks.

Main Publications

1. « Confident Object Detection via Conformal Prediction and Conformal Risk Control: an Application to Railway Signaling », Léo Andéol, Thomas Fel, Florence de Grancey and Luca Mossina, COPA 2023

2. « Conformal Prediction for Trustworthy Detection of Railway Signals », Léo Andéol, Thomas Fel, Florence de Grancey and Luca Mossina, AAAI Spring Symposium 2023: AI Trustworthiness Assessment

3. « PUNCC: A Python Library for Predictive Uncertainty Calibration and Conformalization », Mouhcine Mendil, Luca Mossina and David Vigouroux, COPA 2023

4. « Object Detection with Probabilistic Guarantees: A Conformal Prediction Approach », Florence de Grancey, Jean-Luc Adam, Lucien Alecu, Sébastien Gerchinovitz, Franck Mamalet and David Vigouroux, WAISE@SAFECOMP 2022 (best paper award)

5. « A High-Probability Safety Guarantee for Shifted Neural Network Surrogates », Mélanie Ducoffe, Sébastien Gerchinovitz and Jayant Sen Gupta, SafeAI 2020